(Excerpt from the press release)

With the leading hardware vendors committing to dedicated AI hardware, there’s a need for objective tools that measure how well a device or dedicated accelerator performs AI tasks.

Currently, there are many different inference engines, each with its own advantages and supported hardware. This means that for a benchmark to accurately measure hardware’s performance, it must also support a variety of engines and settings.

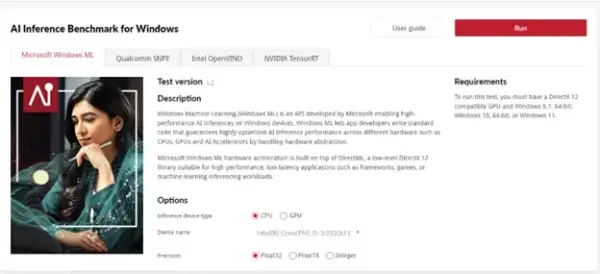

The Procyon AI Inference Benchmark for Windows supports AI inference engines from all the leading technology companies, including:

- Microsoft® WindowsML

- Qualcomm® SNPE

- Intel® OpenVINO™

- NVIDIA® TensorRT™

Switching between a system’s supported AI inference engines in Procyon is as easy as switching tabs in the UI. You can also choose between supported components in your system to measure performance differences between them.

Many new systems have several processing units capable of running an AI task. The Procyon AI Inference Benchmark for Windows lets you select the inference device type so you can demonstrate the performance differences when running AI inference tasks on CPUs, GPUs, and dedicated AI accelerators (e.g., Intel NPUs or Qualcomm HTPs).

Many leading technology companies are already using the Procyon AI Inference Benchmark to demonstrate the performance of their new AI hardware, and Procyon will be ready for the next generation of hardware from other manufacturers.
